Introducing Fin: Intercom’s breakthrough AI chatbot, built on GPT-4

The arrival of OpenAI’s ChatGPT transformed everything – the huge implications for customer service were immediately obvious.

We quickly launched a collection of AI features for our Inbox, applying this technology to deliver efficiency gains. But the number one thing we were asked was “Can ChatGPT just answer my customer questions?” It was just so clear that it was better at understanding natural language than anything we’d seen before.

Unfortunately, our initial explorations showed that hallucinations were too big a problem. GPT-3.5, which powered ChatGPT, was just too prone to making things up when it didn’t know the answer.

But the recent announcement of GPT-4 has changed things – this model is designed to reduce hallucinations. We’re excited to share what we’ve built with GPT-4 in our early testing.

Introducing our new AI chatbot: Fin

Today we’re announcing that we’ve built an AI-powered customer service bot that has the benefits of this new technology, and is suitable for business needs. It’s called Fin, and we believe it has the capacity to become a valuable partner to your support team.

When we started experimenting with building a GPT-powered chatbot, we had a number of design goals in mind. We wanted to build a bot that could:

- Converse naturally, using GPT technology.

- Answer questions about your business, using information you control.

- Reduce hallucinations and inaccurate responses to acceptable levels.

- Require minimal configuration and setup.

We believe that we’ve achieved these goals. Our new AI chatbot works “out of the box”, instantly reducing your support volume and resolution times.

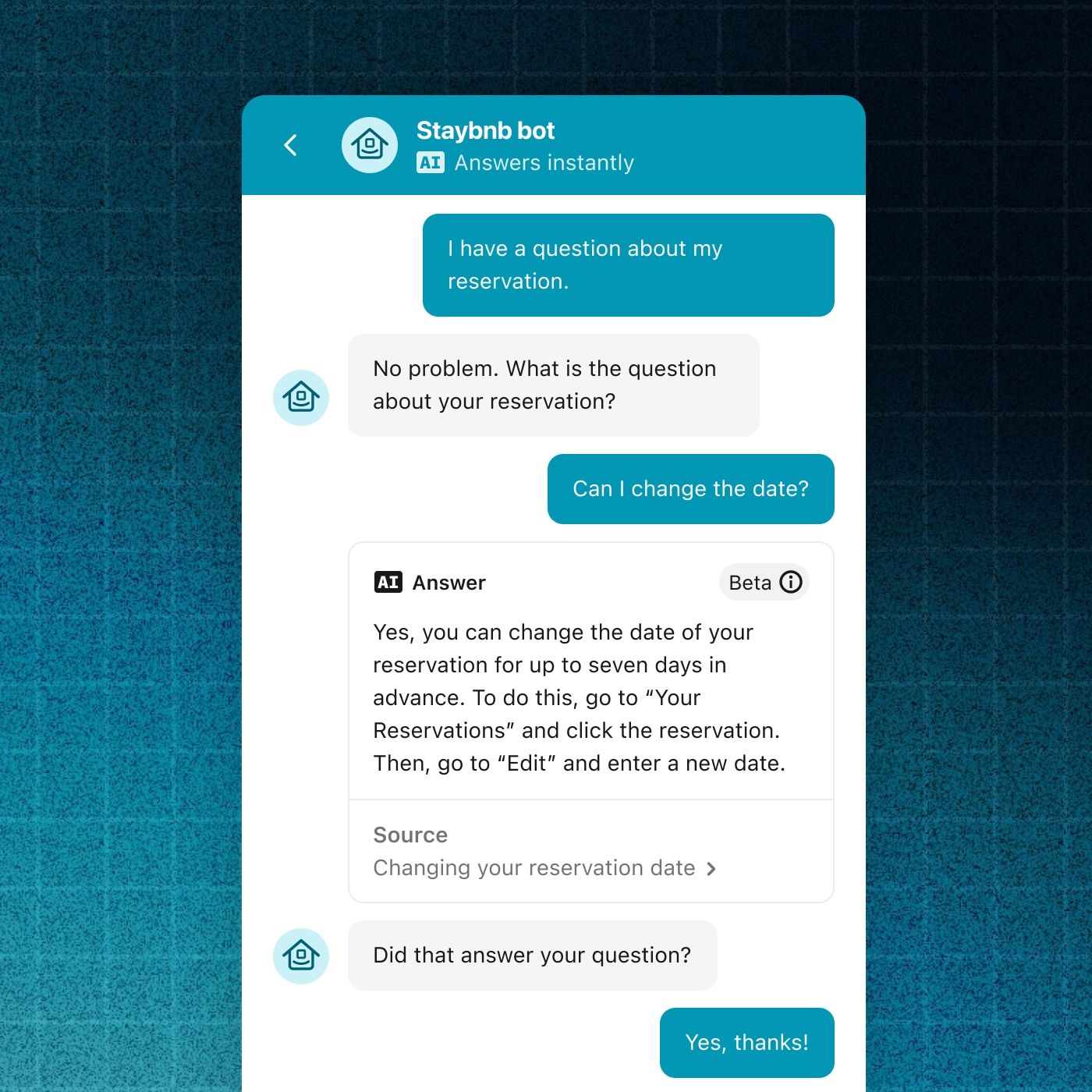

It can converse much more naturally about support queries than existing bots – bringing the natural conversation ability of modern AI to customer service.

It can understand queries that span multiple conversation turns, allowing your customers to ask follow-up questions and get additional clarification.

Fin engages in natural conversations with customers

We have extended GPT-4 with features and safeguards specifically tailored for providing customer support, where trust and reliability are crucial.

Fin refuses to respond to out-of-domain questions

Fin is designed to only provide answers based on content in your existing help center, increasing accuracy and trustworthiness. To reinforce that sense of trust, it always links to its source articles, so your customers can validate responses.

It’s not perfect, as we’ll discuss below, but we believe it’s now ready for many businesses.

Fin requires virtually no initial setup time. It ingests the information in your existing Intercom or Zendesk help center – using the power of AI to immediately interpret complex customer questions, and apply your help center knowledge to answer them.

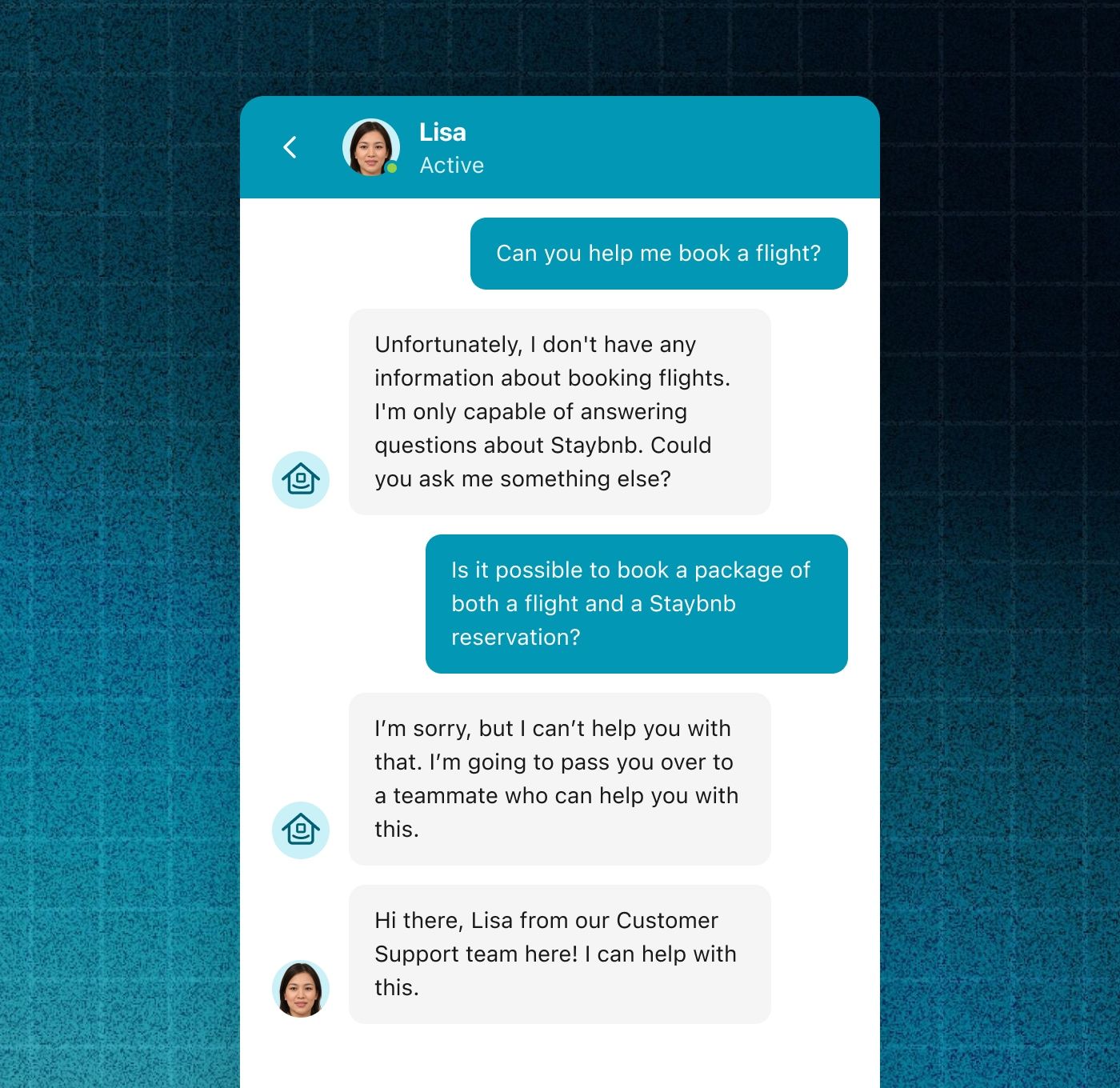

Inevitably, the chatbot won’t be able to answer all customer queries. In those situations, it can pass harder questions to human support teams seamlessly. It works effectively as part of the customer service team, using all the workflows that Intercom enables.

If Fin can’t answer a question, it can seamlessly pass over to a CS rep who can

How we built this AI chatbot

In order to create Fin, we combined the existing technology behind our Resolution Bot with OpenAI’s new GPT-4.

We launched Resolution Bot more than four years ago, and it works very well when set up, but it requires “Answers” or “Intents” to be manually curated. Unfortunately, we know that some customers struggle to get over this initial hurdle.

“We designed Fin to run using the knowledge that already exists in your help center”

For our new bot, we wanted to reduce this friction.

So we designed it to run using the knowledge that has already been created for your help center. It will consume your help center articles, and use the information to answer customer questions directly.

When asked a question, the chatbot uses AI to rephrase the information from the relevant article into a natural-sounding answer, automatically. And, when you update an article, the bot’s reply will update almost immediately. This means you only have to maintain your help content in one place, and the most up-to-date version can be served to customers automatically.

This provides a way for businesses to actually leverage the benefits of modern AI, in a safe and predictable manner, and using their existing processes around creating help center content.
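As a rough illustration of why answers stay current when help content is maintained in one place, here is a minimal Python sketch. It is not Intercom's implementation: the `HelpCenter` class, the `answer` function, and the sample refund article are hypothetical, simple word overlap stands in for real language understanding, and the language-model rephrasing step is replaced by returning the article text as-is. The point is only that the article is read at question time, so an edit shows up in the very next reply.

```python
from __future__ import annotations

import re


def tokens(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for real language understanding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class HelpCenter:
    """Hypothetical in-memory help center mapping article titles to bodies."""

    def __init__(self) -> None:
        self.articles: dict[str, str] = {}

    def upsert(self, title: str, body: str) -> None:
        # The article store is the single source of truth; answers are never cached.
        self.articles[title] = body

    def best_article(self, question: str) -> str | None:
        """Return the title sharing the most words with the question, if any."""
        q = tokens(question)
        best_title, best_score = None, 0
        for title, body in self.articles.items():
            score = len(q & tokens(f"{title} {body}"))
            if score > best_score:
                best_title, best_score = title, score
        return best_title


def answer(help_center: HelpCenter, question: str) -> str | None:
    """Answer from the current article text, or None if nothing matches."""
    title = help_center.best_article(question)
    if title is None:
        return None
    # Placeholder: a real bot would have a language model rephrase this text.
    return help_center.articles[title]


hc = HelpCenter()
hc.upsert("Refund policy", "Refunds are available within 14 days of purchase.")
print(answer(hc, "How do refunds work?"))

# Editing the article is the only step needed to change the bot's reply.
hc.upsert("Refund policy", "Refunds are available within 30 days of purchase.")
print(answer(hc, "How do refunds work?"))
```

Because nothing is stored per question, there is no separate set of bot answers to keep in sync with the help center.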

In future, we plan to go further, and integrate our other machine learning technology to help the AI bot answer questions using the first-party data you have about your customers.

Making a trustworthy chatbot

To avoid the risk of inaccurate or unexpected responses, we designed this chatbot to answer questions using only the help content you have already created. This gives you a high degree of control over what Fin can say.

If someone asks a question that isn’t covered in your help center, it will say it doesn’t know the answer. This is an important feature. Some other GPT bots base their answers on massive amounts of information from the web, but actually constraining the information the bot can use radically increases its predictability and trustworthiness.

We have further mitigated inaccuracy by designing a new user interface for Fin with trustworthiness in mind – it clearly links to the source article when an answer is given, which lets the user verify if the source is relevant, reducing the impact of minor errors.

Fin will always provide a source for its responses, allowing customers to verify its answers

We have also taken measures to make it harder for users to draw the bot into conversation on any topic that is not covered in the help center.
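The two safeguards described in this section, declining questions the help center does not cover and attaching a source link to every answer, can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not how Fin works internally: `Article`, `Reply`, `grounded_reply`, the `min_overlap` threshold, the stopword list, and the `example.com` URL are all hypothetical, and keyword overlap is a deliberately simple substitute for whatever relevance check a production bot would use.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Small illustrative stopword list so filler words do not count as matches.
STOPWORDS = {"a", "an", "the", "is", "are", "do", "does", "how", "what",
             "i", "my", "to", "of", "in", "for", "can"}


@dataclass
class Article:
    title: str
    url: str
    body: str


@dataclass
class Reply:
    text: str
    source_url: str | None  # None means the bot declined to answer


def keywords(text: str) -> set[str]:
    """Lowercased words with stopwords removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def overlap(question: str, article: Article) -> int:
    """Number of question keywords that also appear in the article."""
    return len(keywords(question) & keywords(f"{article.title} {article.body}"))


def grounded_reply(question: str, articles: list[Article],
                   min_overlap: int = 2) -> Reply:
    """Answer only from an article that matches well enough; otherwise decline."""
    best = max(articles, key=lambda a: overlap(question, a), default=None)
    if best is None or overlap(question, best) < min_overlap:
        # Out-of-domain question: say so instead of guessing.
        return Reply("Sorry, I don't know the answer to that.", None)
    # Every answer carries the URL of the article it came from.
    return Reply(best.body, best.url)


articles = [
    Article(
        title="Reset your password",
        url="https://example.com/help/reset-password",  # hypothetical URL
        body="To reset your password, open Settings and choose Reset password.",
    )
]

print(grounded_reply("How do I reset my password?", articles))
print(grounded_reply("What is the capital of France?", articles))
```

Raising `min_overlap` in this sketch makes the bot decline more often, and lowering it makes the bot answer more often at a higher risk of citing a weakly related article.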

Limitations

Accuracy

It may be possible for end users to intentionally work around the constraints we have designed and make the AI chatbot say inappropriate things. If this does occur, we believe it will only be after determined attempts, rather than in natural interactions with customers.

We must point out that the AI is not perfect. There are some customers for whom any risk of delivering irrelevant or incorrect information is unacceptable. They may not want to adopt this AI-powered chatbot just yet.

Large language models currently have a different failure mode than previous bots we’ve built, such as Resolution Bot. While Resolution Bot may sometimes deliver an irrelevant answer, our AI bot may sometimes deliver an incorrect answer. For example, it might get confused by the content of an article and make a statement to the user that is not true. Although the likelihood of this has fallen considerably with recent models, it is not zero.

To enable you to quantify this limitation, we are building an experience where you can import your existing help center into the bot and manually test it.

Overall, with recent improvements to GPT-4 and the design constraints we have built, we are confident that its performance is above the accuracy bar required by many businesses.

Looking forward, we believe that these models will continue to improve, and that accuracy will improve as a result. We also believe that user understanding of the limitations of this technology will grow over time; our AI bot responses are clearly labeled as “AI” to enable this.

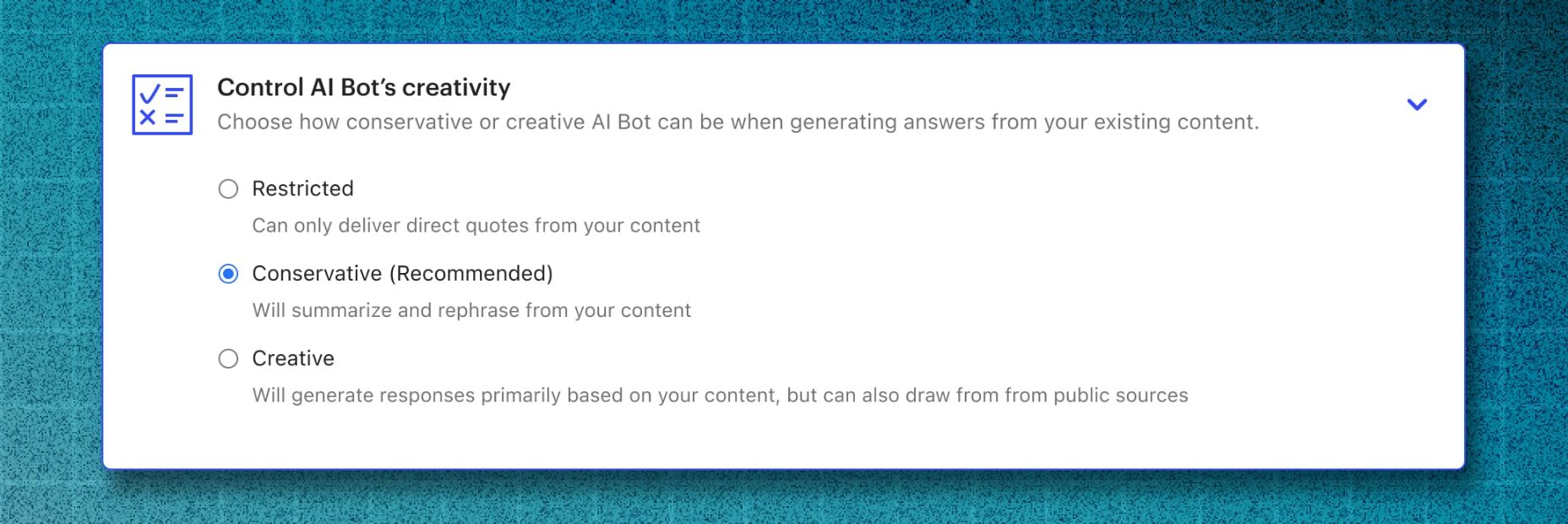

Finally, we believe that different businesses will naturally have different tradeoffs in this area. To that end, we are building configuration settings that allow businesses to set the tradeoff they want to make, as can be seen in this design prototype.

We are exploring configuration settings to control Fin’s level of creativity

Cost of GPT models

It’s important to note that the large language models used to power this chatbot are currently expensive to run. This is cutting-edge technology that requires a lot of compute power. However, we believe this expense is likely to fall over time.

We are confident that for many businesses, implementing our AI chatbot will be ROI-positive, compared to having support representatives answer the same questions.

Latency

Finally, advanced large language models such as GPT-4 have inherent latency. This can be seen when interacting with the bot – 10 or more seconds of latency is not uncommon. We expect this to improve over time as well.

How soon will Fin be available?

We have built an initial version of Fin, which we have been using for internal testing so far, and will be rolling out to a limited beta soon. At the moment, our main constraints are cost and pricing.

We are continuing to polish the user experience based on testing, and to iterate on the integration between Fin and Resolution Bot. We believe advanced customers will continue to use Resolution Bot and similar technology, especially in situations where an action must be taken to fully resolve the user’s query, such as canceling an order.

We expect to move through the testing process rapidly in the coming weeks and months. For reference, the GPT-powered Inbox AI features we announced at the end of January are now available to all of our customers, and already in use by thousands of them.

Sign up for the future of customer service

At Intercom, we have long been building towards a future where most customer conversations are successfully resolved without needing human support, freeing up your team to work on higher value customer conversations.

We believe today’s announcement marks a paradigm shift in the field of customer service – the future is happening right now.

You can learn more about the new AI-powered bot and sign up for the waitlist here.