Conversational design for better products

Main illustration: Gabi Zuniga

We all know what a conversation between two people sounds like.

“Hey, can you help me a sec?”

“Sure. What’s up?”

“I just can’t make sense of this dashboard.”

Human conversation is a sequence of verbal exchanges – what linguists call turn-taking. This rhythmic exchange is deeply hardwired into our social neurophysiology; call-and-response bonding in animals is likely hundreds of millions of years old. And as humans, we carry these hardwired back-and-forth expectations into our interactions with computers.

There’s a lot of discussion around conversational computing these days – at Intercom, we talk about our customer communications platform, conversational support and conversational marketing. But what makes software conversational? The way it sounds, or something deeper?

This piece outlines a process for building and structuring effective conversational experiences in software. Along the way, we’ll explore how conversational UX – cooperative exchanges of inputs and outputs – closes gaps between products and users.

Overcoming gaps in understanding

Paul Grice, a 20th-century philosopher of language who studied how conversation works, is a perennial touchstone for teams working in AI, linguistic engineering, and conversational design. Deservedly so. One of Grice’s key insights is what he called the cooperative principle. Simply put, it’s the idea that a conversation is a collaborative process aimed at building shared meaning by overcoming gaps in understanding.

In human interactions, we instinctively work together to close the gaps in meaning inherent in conversation. That process still fails fairly often, but most of us know to repair confusion quickly with follow-up questions. And beyond knowing when to disambiguate, we excel at making contextual inferences. So when my child asks, “May I have ice cream?” and I say, “It’s not even lunchtime yet!”, she understands that I’m saying no. Whether she accepts that answer is a separate issue.

In software, gaps in understanding can be relatively small (“How do I filter by location?”) or fairly foundational (“Are ‘apps’ different from ‘integrations’?”). Especially for these bigger understanding gaps, you’ll need to design conceptual frameworks and systems that work for your users. Ask yourself, “How do I bridge users’ existing mental models and the product itself?”

To figure out the big and small gaps your conversational UX needs to bridge, start with all the standard things: deeply research users’ problems, understand the competitive landscape, figure out the constraints, align the work with the larger company strategy, and so on. Then define and document the parameters of your cooperative conversational experience – you can use something like the following “job story” formula.

As [user], I’m partnering/collaborating with [product/product area/flow] to [job]. We’re in dialogue about [explicit or implicit user signal].

This can be a broad formulation, typically for early-stage work. For instance: “As a teen with an anxiety disorder, I’m partnering with a new mental wellness chat app to feel better over time. We’re in dialogue about my ups and downs.” Or it can be more granular, for targeted parts of a larger experience: “As a student with test anxiety, I’m partnering with Balm’s breathing coach feature to bring my body back into regulation. We’re in dialogue about how I’m doing moment by moment.”

Be sure you clearly understand who’s in dialogue (e.g., specific user segments and your in-product brand personality), the parameters of the interaction, and the job they’re cooperating on.

Structure conversations through turn-taking

Next, to get a sense of the inputs that will drive the conversation, list the decisions the user will need to make in this experience. You’ll quickly discover that sequence often matters. Pay attention to the implications of sequencing one user decision – that is, one conversational turn – before another.

We’ve all encountered conversational problems in the wild and online. As you work on sequencing, don’t forget to pay attention to the conversational rules that you probably follow intuitively in everyday chats with friends. For the conversationally challenged, Grice elaborated his cooperative principle into rules of engagement, known as his conversational maxims. Keeping those rules in mind, we can solve four common UX design problems:

- Turn-taking problems. In human conversations: too much context or not enough, like over-explanations or terse replies. In UX, don’t present users with a wall of text. But also don’t omit labels, explanations, hint text, or progressive disclosure where users need them.

- Trustworthiness problems. Lies, misrepresentations, exaggeration. In UX, avoid these by aligning with legal best practices – such as avoiding absolute words like “always” and “never” – and by making sure you truly understand the logic that you’re describing.

- Focus problems. Digressions and non-sequiturs. Prevent focus problems in your software by not asking or telling the user something that isn’t relevant to them at that moment. If a SaaS user is only using 3 seats on a plan capped at 10 seats, don’t ask her to upgrade yet.

- Delivery problems. Inarticulate, unclear, or ambiguous communications. To uplevel the nitty-gritty of your UX writing craft, turn to any of the many excellent UX writing books. Obsessively collect screenshots of good UX writing – writing that sounds human – and imagine what each might’ve started out as. (One example to get you started: Netflix’s “Who’s watching?” could have been “Select a viewer.”)

Test it out

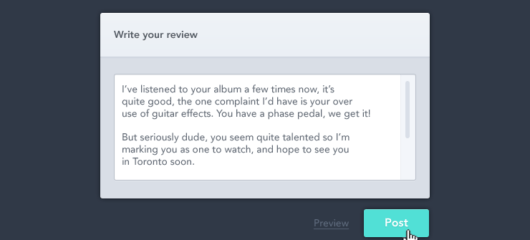

One best practice for refining conversational experiences is the table read (a common practice in screenwriting). Here’s how:

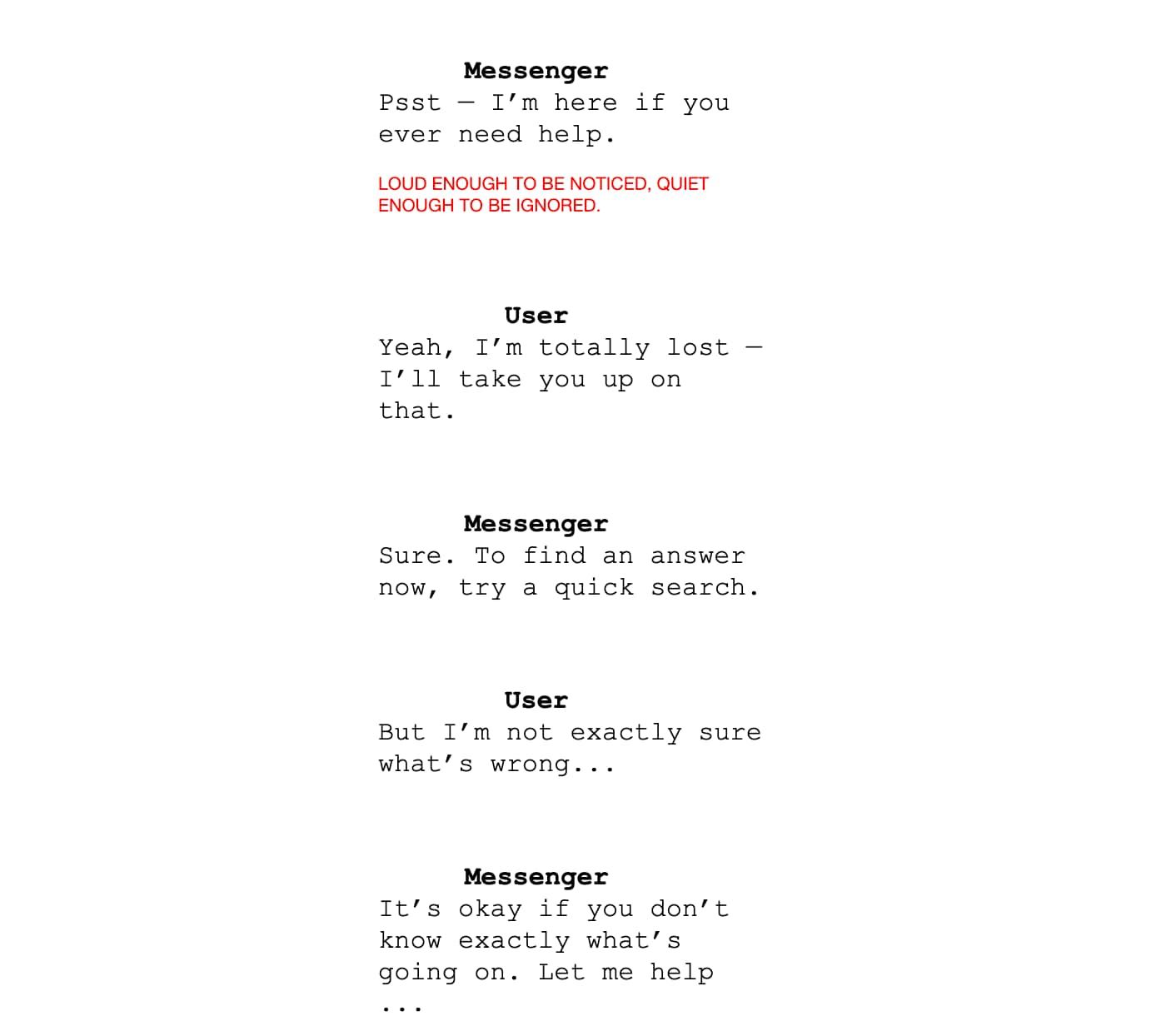

- Create a screenplay version of your interface, either as an exchange of UI copy and user inputs or as an imagined conversation between two characters. (This actually works for graphical interfaces, too; I’ve even done script versions of settings pages.) For forking conversational flows, script out a few different paths. Here’s an excerpt of a script I wrote during early explorations of bots for the Intercom Messenger:

- Run table reads with members of your team. One person plays the user and another plays the interface.

- Take notes. Is the flow logical and smooth? Are there any robotic-sounding places? Any places where the reader stumbled over the words? Be sure you’ve got at least one customer expert – someone from research or support, usually – observing and noting any terms, concepts, or sequences that might be stumbling blocks.

Watch out for moments when one reader (either the user or the interface) drones on too long. In your next iteration, consider solving this by interjecting graphical (read: non-conversational) elements into the larger conversational experience:

- To gather a lot of input from the user all at once: Consider using a form. For instance, have your Messenger deliver a form within the larger conversational framework.

- To communicate a lot of system information to seasoned users all at once: Consider using graphical approaches – charts, lists, information cards, maps, and so forth. Again, these can be embedded within a larger conversational UX.

UX design’s conversational turn

Conversational design at scale is more technically feasible than ever before. That’s a good thing for everyone. When conversational design practices mature alongside systems thinking, information architecture, visual craft, and a genuine hunger to better understand users, the software we build will be a lot better at its job: solving human problems.

The North Star of conversational design is an intelligent system that’s truly and flexibly responsive to user signals. A UI that listens more than lectures. After all, the more inputs the system can take in from the user, the more chances it – and the product team building it – gets to:

- Detect the user’s intention (system action: parsing new inputs)

- Confirm the intention (system action: showing the user that the system has registered inputs)

- Adjust to that input (system action: personalizing the experience)

- Correct misconceptions (system actions: offering proactive explanations or helpful error states)

When we allow users to respond to the system – to dismiss a suggestion, add a rating, customize a view, or any number of other inputs – the system can learn faster. For example, the system can “learn” that a given customer doesn’t need or use a subset of features, but really would benefit from one particular automation feature. Then it can make a meaningful interjection recommending that feature. Intelligent, targeted logic spares users the noise and dilution of unnecessary chatter, so the communications that matter stand out more.

And far beyond facilitating informational exchanges, building for rhythmic turn-taking has another, more human outcome. It lets your product be attuned – that is, emotionally appropriate and truly responsive – at every turn. And at every turn, the understanding gap between you and the user narrows. This is UX empathy in practice.