Is your engineering team experiencing alert fatigue? Ask these 8 questions

Main illustration: Kristin Raymaker

Alert fatigue is a common problem among engineering teams that handle operations and maintain infrastructure.

The problem usually stems from a haphazard approach to writing alerts as teams grow and begin using more infrastructure of increasing complexity. This is quite normal – as a company or a team grows, it often takes time for an observability culture and solid alerting practices to take shape.

It’s easy to create alerts that are too sensitive, too noisy, and too cautious. At the beginning, everything seems alert-worthy simply because it is better to be careful and maximize production signal in the early stages of a product.


Naturally, this approach doesn’t scale well. Yet as the number and complexity of features and infrastructure grow, improving alerts usually sits far down the priority list. The result is a stream of semi-meaningful alerts, noise, context-switching, and multitasking for the on-call engineer. In extreme cases, teammates burn out, alerts get ignored, and your on-call team shifts from improving the quality of the service to constant firefighting with no meaningful impact.

Like all companies, Intercom is not immune to these inefficiencies. So we devised a relatively lightweight process to fight alert fatigue.

How we think about alerting strategy

The concept of “alert discipline” is at the core of getting alerts under control. Like feature work, alerts and the alerting strategy should be approached in a deliberate, structured manner. Unlike feature work, however, alerting can’t be planned well in advance.

After all, it’s highly unlikely that you will be able to predict the operational health and noisiness of a new feature before you ship it. That is why alerts need to be reviewed on a regular, planned schedule, so that alerting toil doesn’t creep up to unsustainable levels.

8 questions to ask when assessing your team’s alerts

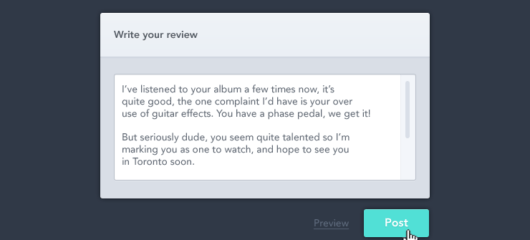

We introduced regular alert review sessions for teams dealing with frequent alerts. These initially took place once or several times per six-week engineering cycle, and became less frequent as the alerts and monitors improved to a more manageable state.

Each alert review session starts with a list of the alerts that fired in the previous period, sorted by how often they fired. We use PagerDuty as our alert source, and its analytics features give us what we need to break down alert frequency and response. The alerts that contribute most to the overall noise (i.e. the ones that fire most often) are reviewed first.
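The ranking step is easy to reproduce outside PagerDuty’s own analytics if you want it in a script. Below is a minimal Python sketch, assuming the incidents from the review period have already been exported as records with a hypothetical `alert` field; it does not call the PagerDuty API, and the alert names are invented.

```python
from collections import Counter

def rank_alerts_by_frequency(incidents):
    """Return (alert_name, count) pairs, noisiest alert first.

    `incidents` is assumed to be an iterable of dicts with an "alert" key,
    e.g. rows exported from an incident-management tool (hypothetical format).
    """
    counts = Counter(incident["alert"] for incident in incidents)
    # most_common() sorts by count, highest first; ties keep first-seen order.
    return counts.most_common()

# Hypothetical export covering the previous review period.
incidents = [
    {"alert": "search-disk-usage"},
    {"alert": "api-5xx-rate"},
    {"alert": "search-disk-usage"},
    {"alert": "worker-queue-depth"},
    {"alert": "search-disk-usage"},
    {"alert": "api-5xx-rate"},
]

for name, count in rank_alerts_by_frequency(incidents):
    print(f"{count:>3}  {name}")
```

The review then walks this list from the top, so the alerts that generate the most noise get discussed first.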

Each alert is then passed through a checklist of questions:

1. Is the alert still relevant?

Any alerts triggered by outdated or unmaintained systems should not be bothering the on-call engineer and should be immediately removed.

2. Is the alert actionable?

If an on-call engineer receives the alert, can they do something right now to fix or mitigate the underlying cause? If the alert is neither actionable nor useful, it should be removed. A weekly summary of problems or performance degradations might be a better home for the information in the alert.

3. Is the information in the alert immediately useful even if not actionable?

We can divide alerting information into two broad categories.

- Signals: These alerts warn of a system operating at its limit, but don’t necessarily mean the service is being impacted. An example would be one of your servers running at 100% CPU. If the service is still operating fine, should on-call spend valuable time investigating? After all, your server is then just performing at the best work-for-cost ratio!

- Symptoms: These alerts fire when the customer experience is impacted. An example here would be the number of 5XX HTTP errors your service is returning to the callers.

These two categories work best in tandem. The on-call engineer should react to and troubleshoot symptoms, and treat signals only as an additional source of information.
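To make the distinction concrete, here is a small Python sketch, assuming each check has been tagged by hand as either a symptom or a signal. The check names and thresholds are hypothetical, and this is not the API of any real monitoring tool: breached symptoms page the on-call engineer, while breached signals are only attached as context.

```python
from dataclasses import dataclass

@dataclass
class Check:
    name: str
    kind: str         # "symptom" (customers affected) or "signal" (system under strain)
    value: float
    threshold: float

def triage(checks):
    """Split breached checks into pages (symptoms) and context (signals)."""
    breached = [c for c in checks if c.value > c.threshold]
    pages = [c.name for c in breached if c.kind == "symptom"]
    context = [c.name for c in breached if c.kind == "signal"]
    return pages, context

checks = [
    Check("api-5xx-rate-percent", "symptom", value=4.2, threshold=1.0),
    Check("web-01-cpu-percent", "signal", value=100.0, threshold=90.0),
    Check("db-replica-lag-seconds", "signal", value=2.0, threshold=30.0),
]
pages, context = triage(checks)
print("page on-call for:", pages)
print("attach as context:", context)
```

A server at 100% CPU on its own never pages anyone here; it only shows up next to a symptom that does.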

4. If the alert is actionable and paging, does it need to be dealt with immediately?

If the issue doesn’t need to be handled immediately, it shouldn’t wake anyone up in the middle of the night. In these situations, the team should decide on other ways to surface the information in the alert, for example a Slack message, a dashboard, or a ticket in the issue tracker.
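One way to make questions 2 to 4 explicit is to encode the routing decision. The Python sketch below is a hypothetical policy, not Intercom’s actual configuration: only actionable, urgent alerts page; actionable but non-urgent ones become tickets; useful but non-actionable information goes to Slack; everything else is dropped.

```python
from enum import Enum

class Route(Enum):
    PAGE = "page"      # wakes someone up
    TICKET = "ticket"  # queued in the issue tracker for working hours
    SLACK = "slack"    # informational, read when convenient
    DROP = "drop"      # not worth surfacing at all

def route_alert(actionable, useful, urgent):
    """Pick a delivery channel from the answers to the review questions."""
    if actionable and urgent:
        return Route.PAGE
    if actionable:
        return Route.TICKET
    if useful:
        return Route.SLACK
    return Route.DROP

print(route_alert(actionable=True, useful=True, urgent=False))
```

Keeping the decision in one small function makes it easy to review alongside the alert definitions themselves.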

5. If it is actionable, is there a runbook or troubleshooting link? Are the steps clear enough to be followed by any engineer on the team?

One of the hardest parts of being on call, especially for a new engineer, is the tribal knowledge that builds up in engineering teams over time. It’s intimidating to join a high-severity production incident only to realize that you’re unfamiliar with that area of the system and it isn’t well documented.


Even if you do have the expertise, you might have written the code so long ago that you don’t clearly remember what it’s supposed to do. Keeping the alert’s troubleshooting information clear, simple, and up to date goes a long way towards reducing your mean time to mitigate (MTTM) and mean time to recover (MTTR) from an incident.

6. If it is actionable, is there a dashboard link and does it show all known potential causes?

Dashboards are a great way to surface large amounts of system information at once, meaning engineers don’t have to dig into various logs, metrics and traces to figure out the cause of a problem. Aggregating data into a dashboard and providing the link as part of the alert allows for much faster troubleshooting.
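Questions 5 and 6 can be partly enforced before a review even starts. The Python sketch below assumes alert definitions are stored as dicts with hypothetical `runbook_url` and `dashboard_url` fields, and flags any alert where either link is missing or malformed. It can only check that the links exist; whether the steps are clear and the dashboard covers all known causes still needs a human.

```python
from urllib.parse import urlparse

# Hypothetical field names; adapt them to wherever your alert definitions live.
REQUIRED_LINKS = ("runbook_url", "dashboard_url")

def missing_links(alert):
    """Return the required link fields that are absent or not http(s) URLs."""
    problems = []
    for key in REQUIRED_LINKS:
        parsed = urlparse(alert.get(key, ""))
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(key)
    return problems

alerts = [
    {"name": "api-5xx-rate",
     "runbook_url": "https://wiki.example.com/runbooks/api-5xx",
     "dashboard_url": "https://dashboards.example.com/d/api"},
    {"name": "search-disk-usage",
     "runbook_url": "",
     "dashboard_url": "https://dashboards.example.com/d/search"},
]

for alert in alerts:
    problems = missing_links(alert)
    if problems:
        print(f"{alert['name']}: missing {', '.join(problems)}")
```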

7. Is the alert too sensitive or not specific enough? Would it benefit from desensitisation or scope change?

Many alerts are useful but poorly calibrated. They can be too broad, so they don’t fire every time they should, or too specific, so they fire several times for the same incident, which only adds to the noise.
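A common desensitisation technique is to require a condition to hold for several consecutive samples and to notify only once per incident. The Python sketch below illustrates that idea under assumed thresholds; it is a generic example, similar in spirit to the `for` duration in Prometheus alerting rules, not a description of Intercom’s monitors.

```python
class DebouncedAlert:
    """Notify only after `required_breaches` consecutive breaching samples,
    and only once until the metric recovers (one notification per incident)."""

    def __init__(self, threshold, required_breaches):
        self.threshold = threshold
        self.required_breaches = required_breaches
        self.consecutive = 0
        self.firing = False

    def observe(self, value):
        """Return True only for the sample that should notify someone."""
        if value <= self.threshold:
            # Recovery: reset so the next incident can notify again.
            self.consecutive = 0
            self.firing = False
            return False
        self.consecutive += 1
        if self.consecutive >= self.required_breaches and not self.firing:
            self.firing = True
            return True
        return False

# Hypothetical error-rate samples with a threshold of 5.
alert = DebouncedAlert(threshold=5.0, required_breaches=3)
samples = [6, 2, 7, 8, 9, 9, 9, 1, 6, 7, 8]
notifications = [i for i, v in enumerate(samples) if alert.observe(v)]
print(notifications)
```

A naive threshold alert would notify on all nine breaching samples here; the debounced version ignores the one-sample spike at the start and notifies once for each of the two sustained incidents.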

8. Finally, does a human being have to do the remediation?

In a way, all computer code automates something that a human can do. So why not automate the remediation of the issues that trigger alerts? Some thornier problems, such as a bug in the code or a performance problem in the system, are hard to fix automatically, but automated actions can solve many common ones.

This is especially true if you are running on a cloud platform like AWS and can provision infrastructure without having to worry about requesting additional hardware. For example, if a node in your search cluster is showing failing disks, why not automatically replace it? If the service is low on compute resources due to traffic increase, why not scale up and add additional VMs or containers? Details of the remediation can then be sent to the team through non-alerting channels so they can review at their leisure.
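The scale-up example can be sketched as a handler that tries automation first, records what it did in a non-alerting log, and escalates to a human only when automation runs out of options. Everything in this Python sketch is hypothetical: the service model, the 80% CPU trigger, and the instance cap are invented, and the actual provisioning call to a cloud provider is left as a placeholder.

```python
from dataclasses import dataclass, field

@dataclass
class ServiceState:
    name: str
    instances: int
    max_instances: int
    cpu_percent: float

@dataclass
class Remediator:
    # Stand-in for a non-alerting channel, e.g. a daily digest the team reads.
    notes: list = field(default_factory=list)

    def handle_low_capacity(self, svc: ServiceState, scale_up_at: float = 80.0) -> bool:
        """Try to scale out instead of paging; return True if a human is needed."""
        if svc.cpu_percent < scale_up_at:
            return False
        if svc.instances < svc.max_instances:
            svc.instances += 1  # placeholder for a real provisioning call
            self.notes.append(f"{svc.name}: scaled to {svc.instances} instances "
                              f"at {svc.cpu_percent:.0f}% CPU")
            return False
        # No headroom left for automation: escalate to the on-call engineer.
        return True

remediator = Remediator()
web = ServiceState("web", instances=4, max_instances=5, cpu_percent=92.0)
first_call = remediator.handle_low_capacity(web)   # scales 4 -> 5, no page
second_call = remediator.handle_low_capacity(web)  # already at the cap, page
print(first_call, second_call, remediator.notes)
```

The cap matters: automation that can scale without limit may hide a real problem (or a runaway bill), so a bounded fallback to a person is a reasonable default.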

Have you answered “no” to any of these questions?

Answering “no” to any of these questions creates a high-priority task for the team to improve the alert, whether that means stopping it from paging, improving the troubleshooting steps, automating the remediation, or simply fixing the underlying system problem.
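The checklist itself is simple enough to capture in a few lines, which helps keep review sessions consistent. In this Python sketch the question keys and task wording are hypothetical labels for the eight questions; questions that don’t apply to an alert are simply left out of its answers.

```python
# One entry per review question: key -> follow-up task if the answer is "no".
# Keys and wording are illustrative labels, not an established schema.
FOLLOW_UPS = {
    "still_relevant": "Remove the alert",
    "actionable": "Remove it or move it to a periodic summary",
    "useful": "Remove it; the information isn't needed on call",
    "needs_immediate_action": "Stop paging; route to Slack, a dashboard or a ticket",
    "has_clear_runbook": "Write or update the runbook",
    "has_complete_dashboard": "Link a dashboard covering known causes",
    "well_calibrated": "Tune thresholds or change the alert's scope",
    "needs_human": "Automate the remediation",
}

def review_alert(name, answers):
    """Return a high-priority task for every question answered "no" (False).

    Questions that don't apply can be omitted from `answers` or set to None.
    """
    return [f"[high] {name}: {task}"
            for key, task in FOLLOW_UPS.items()
            if answers.get(key) is False]

tasks = review_alert("search-disk-usage", {
    "still_relevant": True,
    "actionable": True,
    "needs_immediate_action": True,
    "has_clear_runbook": False,
    "has_complete_dashboard": True,
    "well_calibrated": True,
    "needs_human": False,
})
for task in tasks:
    print(task)
```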

The crux of the approach is doing this in a regular and planned fashion. It keeps the noise levels low and your ops and on-call engineers happy and productive. They can focus on improving quality and delivering impact instead of firefighting.

Are you interested in the way we work at Intercom? We’d love to talk to you – check out our open engineering roles.